Knowledge and Knowing in the AI Era

Cheap knowledge changes everything

Will AI take my job? Will my Universal Basic Income be enough for me to pursue my dreams? Or does the future of work look so grim that even the robots will be quiet quitting?

Everyone is speculating about the future of life and work in the AI era. Some people seem certain of their predictions. The optimists foresee the removal of manual drudgery, medical and scientific breakthroughs, and abundance for all. The pessimists are equally convinced of a further dumbing down of the populace as we outsource our thinking to machines that exert overbearing control over the minutiae of everyday life amidst the encroaching Technocracy.

Join our LinkedIn Live Event, Tues 25th Feb 2:00 PM GMT

Creating Human-Centric Cultures in a Challenging Climate

E. M. Forster’s 1909 novella, “The Machine Stops”, paints a grim picture of humanity's dependency on technology: people become so reliant on an all-encompassing Machine that they lose the ability to think, move, or even live independently. When the Machine eventually fails, society collapses because no one remembers how to survive without it.

“We created the Machine, to do our will, but we cannot make it do our will now. It has robbed us of the sense of space and of the sense of touch, it has blurred every human relation and narrowed down love to a carnal act, it has paralysed our bodies and our wills, and now it compels us to worship it.”

- E. M. Forster, The Machine Stops

Forster’s warning feels eerily relevant today - what happens when we stop questioning, stop learning, stop relating, and stop ‘knowing’ for ourselves? Do we become passive consumers of machine-generated knowledge, unable to discern truth from noise? Or do we find a way to use AI as a tool for the enlightenment of humanity rather than a crutch, ensuring that human wisdom remains at the core of our everyday being?

It feels somewhat ironic that I’m typing these words into my machine, every keystroke synchronously liaising with the Google Docs interface, electronically etching my thoughts into sequences of binary pulses - all quietly overseen by the big machines in the cloud.

I don’t know if we’re heading for utopia or dystopia, but my intuition is that life could be very difficult in the short term, with further political upheaval, further job displacement, and the collapse of institutions and possibly our economic system as the Metacrisis comes to a head. The collision of exponentially escalating systemic crises with exponentially advancing technology will be a rough ride. But humans are adaptable and, hopefully, we’ll come to our senses and figure things out. My long-term view is more optimistic and more aligned with Kevin Kelly’s concept of a protopia, an emergent state of slow and gradual improvement.

Just as in pre-internet days, we would not have conceived of a President Trump meme coin, unboxing videos, ‘mukbang’, and the global influence of the ‘Hawk Tuah’ girl, so we can’t fully anticipate the social and psychological implications of rapidly advancing technologies.

“The real problem of humanity is the following: We have Paleolithic emotions, medieval institutions and godlike technology. And it is terrifically dangerous, and it is now approaching a point of crisis overall.”

- Edward O. Wilson

The NVIDIA panic is just for starters

The NVIDIA stock sell-off following the irruption of DeepSeek into the LLM fray foretells a reactionary panic amidst the tumultuous changes ahead, as organisations finally put into practice the ‘Agility’ they have long claimed to embrace.

While LLMs (Large Language Models) are making already productive engineers even more efficient, they are also taking over many of the simpler tasks that traditionally served as entry points for junior engineers. They are also reducing the need for people to do some technical authoring and to write automated tests by hand. The business model of offshore organisations built around low-cost, low-skill tasks will face growing challenges to its viability. Experienced and accomplished engineers are already using AI tools to massively enhance their productivity - the fabled 10x engineer may soon be replaced by the 100x rockstar. It is clear that AI will reshape the software industry, but in ways we can only begin to envision right now.

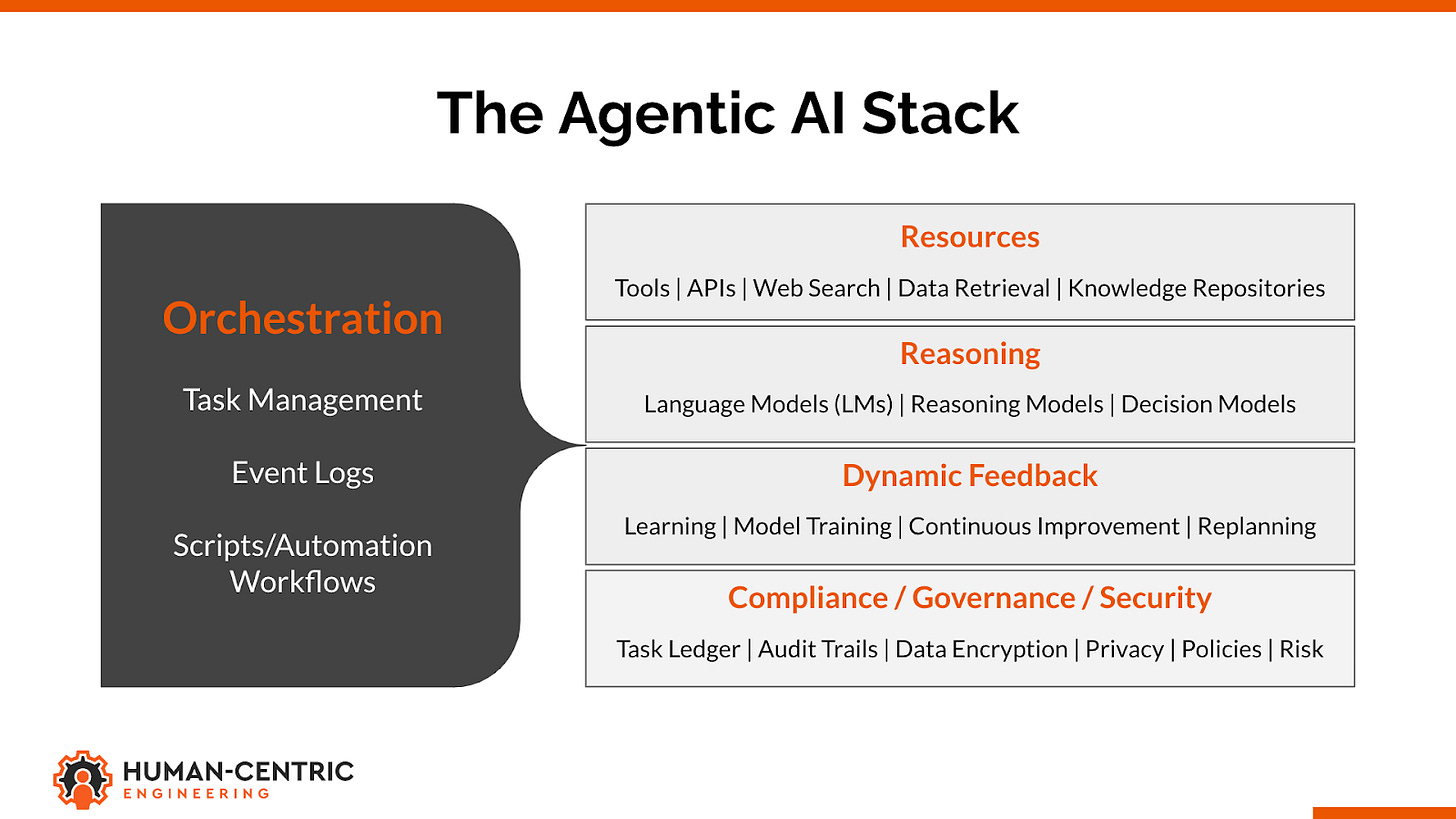

We have only begun to scratch the surface of the potential of Agentic AI - systems where language models (both large and small) are integrated with reasoning engines, knowledge bases, APIs, and other resources to operate autonomously. Agentic AI will not assist from the sidelines like current LLMs; it will make autonomous decisions, execute tasks, and adapt dynamically, transforming not only software development but entire business processes. Perhaps it’s not too far-fetched to imagine teams of AI Agents working alongside, and outperforming, human teams.

While opinion is divided among software engineers on how far Agentic AI will encroach on their profession, the picture is unlikely to be a black-and-white ‘all or nothing’. More likely is a begrudging acceptance that there’ll be significant pressure to delegate a certain proportion of our work to the machines.

AI Agentic Autonomy & Corporate Fragmentation

Autonomous AI Agents could break the economies of scale that currently benefit large organisations. Large corporations may be broken up into small, highly networked autonomous nodes of intelligence which can be used on a fractional basis - providing decentralised services to the highest bidder as needed in real time, facilitated and incentivised via streaming micropayments.

Value would then be delivered by a process of discovery rather than pre-determined flows. Every automated agentic component would compete in a free market where its viability depends on its efficiency in delivering value.
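
To make the market idea above a little more concrete, here is a purely illustrative Python sketch of how a requester might choose between competing agent bids. It does not describe any real system: the `Bid` fields, the `select_bid` function, and the "value per unit of cost" rule are all assumptions made up for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Bid:
    """A hypothetical offer from one autonomous agent to perform a task."""
    agent: str
    price: float           # cost quoted for the task (arbitrary units)
    expected_value: float  # value the agent claims it will deliver


def select_bid(bids: List[Bid], budget: float) -> Optional[Bid]:
    """Pick the affordable bid with the highest expected value per unit of cost.

    Returns None when no bid fits within the budget.
    """
    affordable = [b for b in bids if 0 < b.price <= budget]
    if not affordable:
        return None
    return max(affordable, key=lambda b: b.expected_value / b.price)


if __name__ == "__main__":
    bids = [
        Bid("summariser-a", price=10.0, expected_value=50.0),
        Bid("summariser-b", price=4.0, expected_value=30.0),
        Bid("summariser-c", price=100.0, expected_value=900.0),
    ]
    winner = select_bid(bids, budget=20.0)
    print(winner.agent if winner else "no affordable bid")
```

In this toy version an agent's 'viability' is simply how often it wins; a real marketplace would also have to verify that the claimed value was actually delivered.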

The future is a fiction, a story in our imaginations and often the source of existential anxiety. We can’t predict exactly how the tech industry and the broader corporate ecosystem will change as the AI wrecking ball swings through, but adaptability will be key to thriving.

What’s good for humans will be good for the machines

Just as AI in the hands of experienced engineers is bringing them massive productivity gains, so the organisations that will gain the most from agentic AI are those whose engineers are already set up for success, whose culture is already nimble, and whose value delivery flows are already efficient.

Those organisations that are already focused on creating the conditions for humans to thrive will be the ones that gain the greatest competitive advantage from the Agentic AI era.

The conditions needed for an orchestrated multi-agent system to thrive are not dissimilar to the conditions for human thriving - at a high level, this comes down to environments that enable mastery, autonomy, and purpose. What’s good for humans will be good for the machines.

Mastery

Mastery involves the ability to develop skills and improve through practice, experimentation, feedback, and continuous learning:

Clear boundaries: Defining roles and responsibilities so agents (or individuals) know what to focus on and where their competencies apply.

Self-correcting mechanisms and tight feedback loops: Real-time adjustments based on performance, ensuring constant refinement and skill development.

Experimentation and continuous learning: Encouraging a growth mindset where agents or individuals are empowered to learn from trial and error, optimising their approach over time.

Task breakdown and competency-based assignment: Assigning tasks according to the strengths and competencies of the agents, allowing them to excel in areas where they can perform best.

Autonomy

Autonomy is about the freedom to make decisions and take action independently while still aligning with overall goals. It’s crucial for both human and machine systems to function optimally:

Dynamic replanning and adaptation: The ability to adjust goals, strategies, and actions based on real-time data and changing conditions.

Reflexivity: An agent’s (or individual's) ability to reflect on their decisions and outcomes, adjusting future actions accordingly.

Accountability and task ledgers: Assigning clear ownership of tasks and domains, with traceability for accountability and learning.

Resources and bottlenecks: As machines or individuals operate, any limitations - such as compute power or resource constraints - become visible, so that efforts can be directed toward resolving them and improving flow.
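
For readers who think in code, the ideas of competency-based assignment, task ledgers, and feedback loops described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real orchestration framework: the `Agent`, `LedgerEntry`, and `TaskLedger` names, the 0-to-1 proficiency scale, and the fixed 0.1 adjustment are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Agent:
    name: str
    # skill -> proficiency on a hypothetical 0.0 to 1.0 scale
    competencies: Dict[str, float]


@dataclass
class LedgerEntry:
    task: str
    skill: str
    owner: str               # clear ownership, for traceability
    outcome: str = "pending"


@dataclass
class TaskLedger:
    entries: List[LedgerEntry] = field(default_factory=list)

    def assign(self, task: str, skill: str, agents: List[Agent]) -> LedgerEntry:
        # Competency-based assignment: choose the agent strongest in the skill.
        best = max(agents, key=lambda a: a.competencies.get(skill, 0.0))
        entry = LedgerEntry(task=task, skill=skill, owner=best.name)
        self.entries.append(entry)
        return entry

    def record(self, entry: LedgerEntry, succeeded: bool, agents: List[Agent]) -> None:
        # Feedback loop: record the outcome and nudge the owner's proficiency
        # up or down by an assumed fixed step, clamped to the 0.0-1.0 range.
        entry.outcome = "done" if succeeded else "failed"
        owner = next(a for a in agents if a.name == entry.owner)
        current = owner.competencies.get(entry.skill, 0.0)
        delta = 0.1 if succeeded else -0.1
        owner.competencies[entry.skill] = min(1.0, max(0.0, current + delta))


if __name__ == "__main__":
    team = [
        Agent("planner", {"planning": 0.9, "coding": 0.3}),
        Agent("coder", {"coding": 0.8}),
    ]
    ledger = TaskLedger()
    entry = ledger.assign("write parser", "coding", team)
    ledger.record(entry, succeeded=False, agents=team)
    print(entry.owner, entry.outcome)
```

The same structure applies whether the 'agents' are software components or people: ownership is explicit, outcomes are traceable, and each result feeds back into who gets the next task.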

Purpose

Purpose is about understanding why we do what we do, the broader goal that guides actions and aligns efforts toward a shared outcome:

Clarity of purpose, role, and goal orientation: A clear understanding of what the collective aim is and how individual efforts contribute to the larger mission.

Alignment and cohesion: Ensuring that all agents or individuals are aligned toward the same objective, with cooperative synergy.

Clarity around the value stream: Recognising the flow of value and understanding how each step or action adds to the greater output, whether in human work or machine operation.

Ethical orientation: Machines directed by human ‘knowing’ of what is right, what is just, and what is good.

Ultimately, just as humans need an environment that encourages skill development, independent action, and alignment with meaningful goals, so too do multi-agent systems and machines acting as tools to fulfil human needs. The conditions for thriving - clear boundaries, feedback, accountability, and a shared ethical purpose - are foundational for both human and machine success.

Geoff does a great job of articulating the organisational prerequisites for human thriving. In Geoff’s words, we need “Organisations of innovation, agility, and adaptiveness where sense making, decision making, and action taking are tightly coupled, rapidly and repeatedly iterated, deeply embedded and widely distributed throughout the organisation.” These are the conditions required for thriving humans and their machines.

Distinguishing Knowledge from Knowing

Many software engineers view the promise of multi-agent software development as an unrealistic fantasy. They’ve seen how poorly LLMs handle novel problems and how, in the wrong hands, they can introduce unnecessary complexity. While LLMs may perform well on tightly bounded, modular tasks, they often break down when confronted with the real-world interdependencies of enterprise software. Just as everything looks like a nail to someone holding a hammer, blindly trusting AI tools without discerning oversight and rigorous verification would be a mistake.

In the AI era, it's well worth musing on the distinction between 'knowledge' and 'knowing'.

Knowledge is the structuring of information so that it can be usefully applied to problems. Knowing brings the human faculties into play - the faculties of taste, intuition, ethics, discernment, and judgement. Knowing translates to value, the wise application of knowledge.

Knowledge is horizontal, whereas knowing involves vertical growth and personal development - the ability to transcend domains and paradigms and to connect the dots. Knowing is sensemaking and meaning-making.

“Information is not knowledge.

Knowledge is not wisdom.

Wisdom is not truth.

Truth is not beauty.

Beauty is not love.

Love is not music.

Music is THE BEST.”

- Frank Zappa

Just as beautiful music can inspire rapture, ‘knowing’ is the ineffable quintessence of human experience - an intuitive sense of awe that sings to our deepest values.

Adaptability in the AI era will mean creating opportunities for the emergence of knowing. That means more focus on developing the human faculties: an elevation of human awareness and consciousness applied to real-world challenges, coupled with an openness to intersectionality, where ideas mingle and opportunity emerges.

Releasing our 'knowing' will rest on our ability to create more of the right kind of spaces for generative human interaction, relating, and interdisciplinary encounters. Spaces for reflexivity, spaces for personal and collective inquiry. Spaces for suspending assumptions - for doubt and dialogue - spaces that will support people in transcending their individual domains and integrating their individual perspectives into an ethical whole that is far greater than the sum of the parts.

Knowing will be prized in an AI era where knowledge is cheap. The challenge is for organisations and society as a whole to facilitate a process of transformative human knowing, to support the elevation of our human qualities such that we use our godlike technologies wisely - as masters of the technologies rather than slaves to the encroaching Technocracy. To optimise for humanity and our wider ecosystems.

“How dangerous is the acquirement of knowledge and how much happier that man is who believes his native town to be the world, than he who aspires to be greater than his nature will allow.”

- Mary Wollstonecraft Shelley, Frankenstein

The questions of what is right, what is ethical, and how we come to know what is right are ones we’ve grappled with since Plato argued that rulers should be philosophers. He believed that leaders should seek truth and challenge assumptions, rather than ruling through sheer power or self-interest. This idea highlights the challenge of questioning assumptions and moving out of our dark caves of ignorance. Much like Plato’s inquiry into the Tripartite Soul and the Ideal State, in our organisations and our use of technology, we must strive for alignment between Logos (reason and wisdom), Thymos (spirit and courage), and Epithymia (appetite and desire) for the emergence of justice. Ultimately, it comes down to good leadership - a self-reflective and contemplative approach with a ‘just’ sense of ‘knowing’ that goes way beyond our programming capability and the efficiency of our workflows, right to the heart of doing what’s right for individual and collective prosperity.

Combining Plato’s inquiries with the aforementioned observations of Edward O. Wilson, we need to temper our base desires and primal instincts, directing the energy of our courageous spirit to challenge and transform our outdated institutions. This requires developing a self-reflective ‘knowing’ of what is truly ‘just’ so that we can harness our ‘godlike’ technologies responsibly and orient them toward the prosperous flourishing of both people and planet.

"We have developed speed, but we have shut ourselves in. Machinery that gives abundance has left us in want. Our knowledge has made us cynical. Our cleverness, hard and unkind. We think too much and feel too little. More than machinery we need humanity. More than cleverness we need kindness and gentleness. Without these qualities, life will be violent and all will be lost…

...Don’t give yourselves to these unnatural men - machine men with machine minds and machine hearts! You are not machines! You are not cattle! You are men! You have the love of humanity in your hearts!..

...You, the people have the power - the power to create machines. The power to create happiness! You, the people, have the power to make this life free and beautiful, to make this life a wonderful adventure."

- Charlie Chaplin’s speech in The Great Dictator

While discussing this article with my colleague, Simon Holmes, he suggested that perhaps part of the reason for the fear/hope that we can replace people and jobs with machines is that we treat people like machines. If we let people be people, who knows what we might be capable of?

Prometheus and tech

Ever since Prometheus stole the technology of fire from the gods, his gift to humanity, it has been incumbent upon us to use such power ethically, to apply our human ‘knowing’.

For his defiance, Prometheus was chained to a rock, condemned to have his liver devoured daily by an eagle - only for it to grow back overnight, ensuring his eternal torment. If AI goes rogue, what fate awaits the Silicon Valley gods? Perhaps, like Prometheus, they will suffer a daily torment - not from eagles, but from an AI-generated flood of deepfake apology videos for the unintended consequences of their creations - a daily roasting at the hands of their own inventions.

If, as an engineer, you're unwilling to adapt to the upcoming changes, or as an organisation, unwilling to challenge your ingrained thinking, it’s time to pay attention - the Agentic AI paradigm is gaining traction, with or without you. Your growth will come from broadening your horizons, stepping beyond the comfort zone of specialisation, and nurturing your curiosity.

Developing software products is not just about writing code. Coding is only a fraction of the broader process of creating a software product.

The development of software products is a deeply human endeavour:

Sense-making and meaning-making: Understanding the broader context, aligning on shared understanding, and extracting actionable insights.

Generating divergent ideas: Exploring multiple perspectives, innovative solutions, and alternative approaches.

Converging on solutions: Refining, validating, and selecting the most viable path forward.

Deconstructing challenges: Breaking down complex problems into manageable, solvable components.

Filtering out what’s irrelevant: Avoiding distractions and focusing on what truly delivers value.

Contending with the egoic ambitions of executives: Managing leadership expectations and balancing strategic vision with realistic execution.

Pushing back against unreasonable demands: Setting boundaries to ensure sustainable development and maintain technical integrity.

Balancing short-term delivery with long-term sustainability: Avoiding technical debt while ensuring business needs are met.

Navigating power structures and workplace dynamics: Understanding informal and formal networks to drive impact and alignment.

Translating business needs into technical realities: Bridging the gap between strategy and execution.

Enabling teamwork through loosely coupled, highly cohesive teams: Encouraging collaboration while maintaining autonomy.

Guiding engineers: Providing mentorship, technical leadership, and alignment with strategic goals.

Setting up tight feedback loops: Enabling continuous learning, adaptation, and quality improvement.

Solving critical roadblocks: Identifying and addressing obstacles that threaten progress.

Managing an organisation as an interconnected system: Recognising interdependencies and optimising for overall effectiveness.

Curating and managing knowledge effectively: Ensuring institutional memory, avoiding redundancy, and facilitating knowledge transfer.

Integrating ethics and societal impact considerations: Building technology responsibly and aligning with human values.

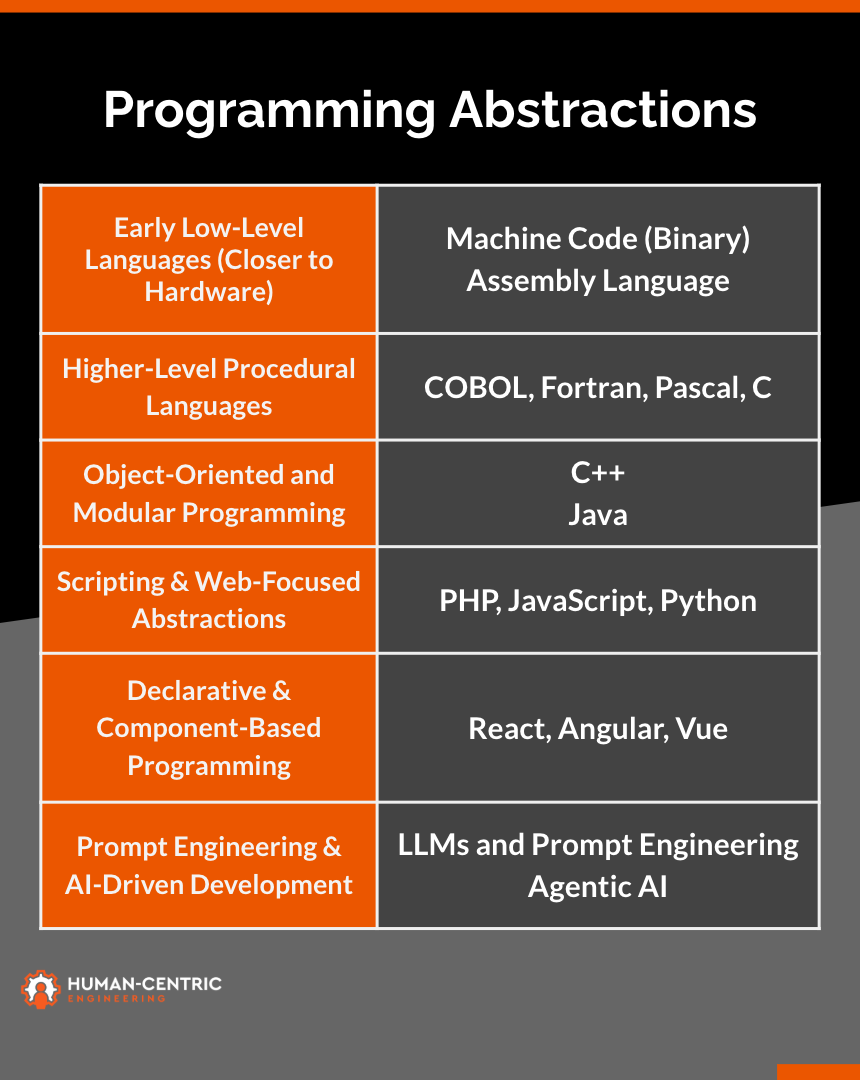

AI is not threatening software engineering itself. It is yet another layer of abstraction in the programming stack, and one that will threaten engineers who have settled for mediocrity. Only by zooming out, with a willingness to reach across functional boundaries, will you gain a deeper understanding of the business domains you translate into code. Bringing the creative artistry and taste that only humans can muster, you can help to architect the Agentic AI future instead of clinging to the familiar status quo.

If your career progression to date has relied heavily on being good at writing code, it’s time to rethink your strategy, relinquish the identity you’ve built around your expertise, and be willing to adopt a beginner’s mind in approaching the broader aspects of software engineering. The landscape is changing rapidly, there will be winners and losers, and the pace of change will only increase.

AI is indifferent to your opinion on whether it can code well, whether it will reshape the industry, or whether it is just an overhyped fad. There is a feel of inevitability about it: the efficiency gains it promises will catch the attention of organisations whether we as developers approve or not.

The AI bubble will inevitably pop, the dust will settle, and the market will consolidate. However, no one truly knows which remnants of today’s software engineering landscape will endure. We can sit on the sidelines, we can bury our heads in the sand like ostriches in denial, or we can learn to master AI, to wield its power wisely, with the subtle sensitivities of our human ‘knowing’.

Is software engineering collaborative knowledge work? No, it’s collaborative ‘knowing’ work.

No one really knows where this is heading. It could turn out great, or it could be a complete mess. Right now, it looks like there’s a lot of chaos coming, and not everyone’s going to make it through in one piece.

I think about this day and night; it's always on the periphery of my vision. Will I have enough time to build a decent retirement where I can live comfortably and enjoy the fruits of my labour? Or will I end up getting stuffed and not be able to find a job in 5-10 years?

I feel sorriest for low-skilled workers. What used to be somewhat attainable, like breaking into web development, is going to completely disappear.

I hope my primitive brain is just worried for nothing. I think at the very least, as you allude, there's going to be tons of chaos, especially with the next GPT-3 moment, and it'll gradually cause more job losses. The question is whether we are going to see a moment of critical mass where all of a sudden large swathes of the economy no longer need humans to function.

All we can do is sit and observe; the powers moving this are far greater than most of us can stop. When the economy-destroying advancements come, we can only hope that business owners will recognise that replacing their workers with AI just means a smaller economy, and so fewer customers for everyone.

I just don't see UBI becoming a thing at the moment. In the US, for example, they're stripping away the social security net, and in the UK people are receiving less and less.

I hope things will be alright.

The cynical karmic echo in me occasionally wonders if the incoming chaos is a debt to be repaid from the wonder years of being a software engineer. We had it so good for so long, with minimal survival-of-the-fittest effects at play.

Now we’re being pitted against one another and those refusing to adapt will likely be left behind.

The optimist in me is excited for software engineering to be dosed with more human aspects than purely logical solutions. The pessimist worries about what will happen when the trajectory inevitably continues long past that point.